As a visible project, The Analyst's Canvas is new, but it's been cooking for years. Now comes the fun part: working through the ways to use it. Today, let's talk about raw material: the data that go into the analytic process that eventually leads to information, insight and action.

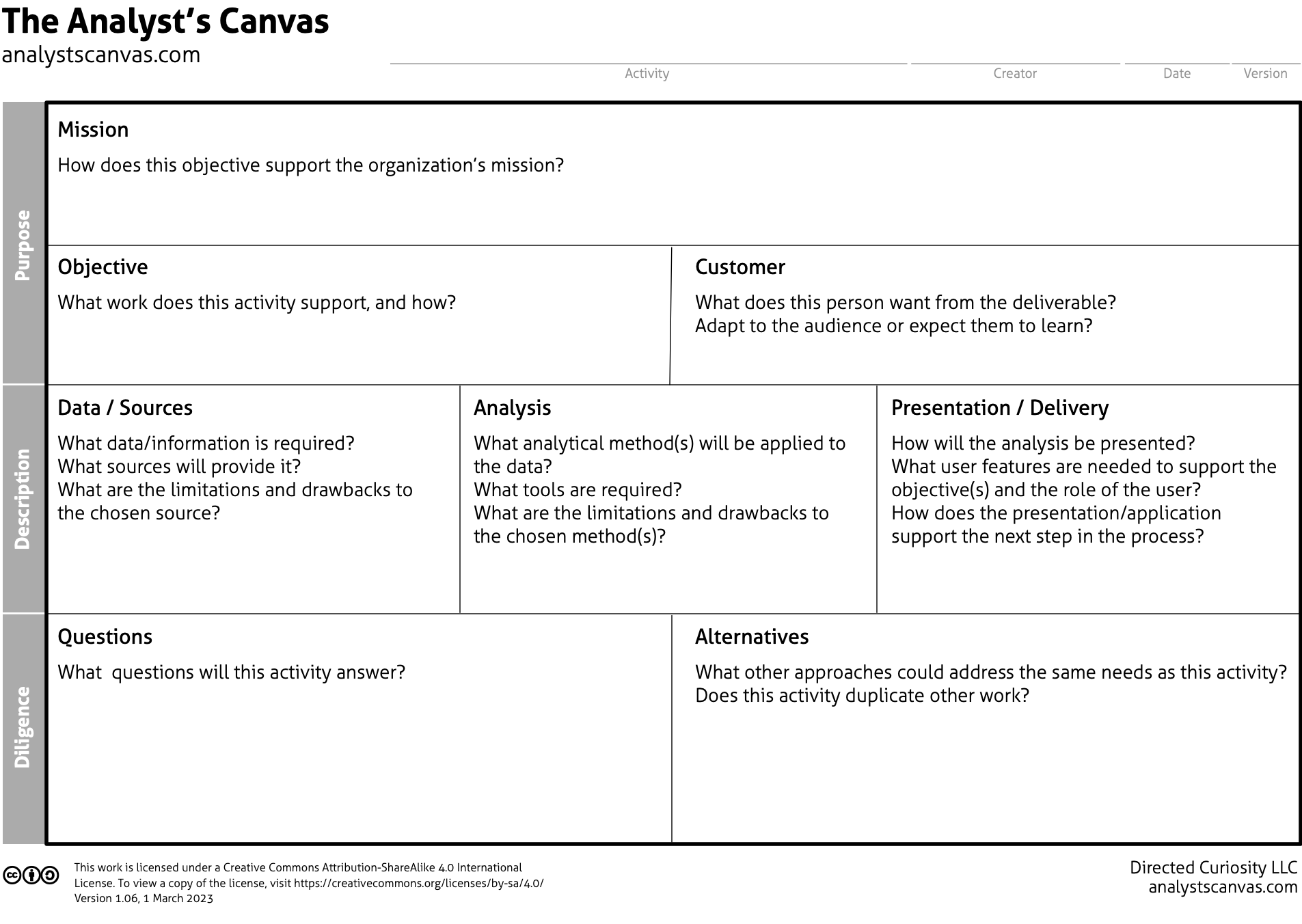

Look at the row of three boxes across the middle of the canvas: data / sources, analysis and presentation / delivery. In 2008, I used that basic outline to describe the building blocks of social media analysis. With the boxes empty, we have a framework to summarize many different tools and approaches. This row is labeled Description.

The big idea of the canvas is to keep analytical work grounded with two of my favorite questions: what are you trying to accomplish, and why? Collectively, the boxes on the Description row characterize an intelligence or analytics process from source data to delivery, whether the finished product is a report, a software tool or something else. The row is below the Objective box as a reminder that the work has to support meaningful objectives.

I suspect that many discussions will take the components of an analytical play as a unit, especially since so many capabilities come packaged as turnkey tools with data, analytics and presentation built in. But whether building a new capability or evaluating an existing one, the three component boxes must be the right choices to support the Objective. It's not enough that the pieces work together, because we run the risk of developing elegant solutions to the wrong problems.

Using the canvas as a prompt

Every box in the canvas includes a set of basic prompts to initiate the exploration. The Data exploration begins with these:

- What data/information is required?

- What sources will provide it?

- What are the limitations and drawbacks of the chosen sources?

- Is this the right source, or is it just familiar or available?

Beyond the prompts, we can use the canvas to ask important questions about our preferred data sources:

- Does this source contain the information needed to support the Objective?

- Does the information from this source answer the questions we need to address?

- What other/additional sources might better answer the questions?

Analyzing the canvas

If you look back at the canvas, you'll see that those questions address the relationship between one box (in this case, Data) and its neighbors (Objective, Questions and Alternatives). Its other neighbor, Analysis, is a special case. Depending on which you consider first, you might ask whether the source contains the information needed for your analysis, or whether the analysis is appropriate to the properties of the source.

In the first draft of the Explorer Guide, I included some suggested orders for working through the sections of the canvas for a few scenarios. In this exercise, I'm seeing something different: insights we can gain from the relationships across boundaries within the model. More to come.
